PRACTICAL 1

AIM:

Data preprocessing in Python using scikit-learn

THEORY:

Data Preprocessing

• Pre-processing refers to the transformations applied to the data before it is fed to the algorithm.

• Data preprocessing is a technique used to convert raw data into a clean data set. Data gathered from different sources arrives in a raw format that is not suitable for analysis.

Need for Data Preprocessing

• To get better results from a model, the data has to be in a proper format. Some machine learning models need their input in a specific form. For example, many Random Forest implementations do not accept null values, so null values in the raw data set have to be handled before the algorithm can run.

• The data set should also be formatted so that more than one machine learning or deep learning algorithm can be run on the same data set and the best of them chosen.

Data pre-processing techniques:

- Standardization:

Standardization (or Z-score normalization) rescales each feature so that it has mean μ=0 and standard deviation σ=1, the same mean and standard deviation as a standard normal distribution. Here μ is the mean (average) of the feature and σ is its standard deviation from the mean.

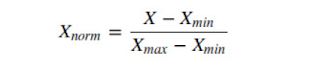
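
As a minimal sketch of standardization, scikit-learn's `StandardScaler` can be applied to a small array. The toy values below are hypothetical and are not taken from the voice dataset; each column is transformed as z = (x - μ) / σ.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: two features on very different scales.
X = np.array([[1.0, 100.0],
              [2.0, 300.0],
              [3.0, 500.0]])

scaler = StandardScaler()
X_std = scaler.fit_transform(X)  # per column: (x - mean) / std

# After scaling, every column has mean 0 and standard deviation 1.
col_means = X_std.mean(axis=0)
col_stds = X_std.std(axis=0)
print(X_std)
print(col_means, col_stds)
```

In a train/test setting the scaler would normally be fitted on the training split only and then reused to transform the test split.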

- Normalization:

The aim of normalization is to change the values of numeric columns in the dataset to a common scale, without distorting differences in the ranges of values.
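
One common way to do this is min-max scaling. The sketch below assumes that "normalization" here means rescaling each column to the range [0, 1] with scikit-learn's `MinMaxScaler`; the toy values are hypothetical.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical toy data: two features with different ranges.
X = np.array([[1.0, 100.0],
              [2.0, 300.0],
              [3.0, 500.0]])

scaler = MinMaxScaler()  # default feature_range is (0, 1)
X_norm = scaler.fit_transform(X)  # per column: (x - min) / (max - min)

# Both columns now run from 0 to 1, with the middle row at 0.5.
print(X_norm)
```

Relative spacing inside each column is preserved; only the scale changes.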

- Label Encoding:

Label encoding converts labels into numeric form so that they are machine-readable. Machine learning algorithms can then operate on those labels directly. It is an important preprocessing step for structured datasets in supervised learning.
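
A minimal sketch with scikit-learn's `LabelEncoder` follows. The "male"/"female" strings are illustrative values chosen for this example, not rows read from the actual dataset file.

```python
from sklearn.preprocessing import LabelEncoder

# Illustrative class labels (assumed values, not read from the dataset).
labels = ["male", "female", "female", "male"]

encoder = LabelEncoder()
encoded = encoder.fit_transform(labels)

# Classes are sorted alphabetically, so "female" -> 0 and "male" -> 1.
print(list(encoder.classes_))
print(list(encoded))

# The mapping can be reversed to recover the original strings.
decoded = encoder.inverse_transform(encoded)
print(list(decoded))
```

`LabelEncoder` is intended for target labels; for categorical input features, scikit-learn provides `OrdinalEncoder` and `OneHotEncoder`.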

- Discretization:

Discretization is the process of partitioning continuous attributes, features or variables into intervals, turning them into discrete or nominal attributes.
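
As a sketch, scikit-learn's `KBinsDiscretizer` can split a continuous feature into equal-width intervals. The values and the choice of three bins are assumptions made for illustration.

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

# Hypothetical continuous feature with values between 0 and 10.
X = np.array([[0.0], [2.0], [5.0], [7.0], [10.0]])

# Three equal-width bins; "ordinal" returns the bin index for each value.
disc = KBinsDiscretizer(n_bins=3, encode="ordinal", strategy="uniform")
X_binned = disc.fit_transform(X)

print(disc.bin_edges_[0])   # edges of the three intervals
print(X_binned.ravel())     # bin index assigned to each value
```

Other strategies (`"quantile"`, `"kmeans"`) place the bin edges differently and may suit skewed data better.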

- Imputation:

Imputation is the process of replacing missing data with substituted values. Because missing data can create problems for analyzing data, imputation is seen as a way to avoid pitfalls involved with listwise deletion of cases that have missing values.
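
A minimal sketch of mean imputation with scikit-learn's `SimpleImputer` is shown below. The array is hypothetical, and the mean strategy is only one of several options (`"median"`, `"most_frequent"`, `"constant"`).

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Hypothetical data with one missing value in each column.
X = np.array([[1.0, 10.0],
              [np.nan, 20.0],
              [3.0, np.nan]])

# Replace each missing value with the mean of its column.
imputer = SimpleImputer(strategy="mean")
X_filled = imputer.fit_transform(X)

# Column means computed from the observed values: 2.0 and 15.0.
print(X_filled)
```

No rows are dropped, so the sample size stays the same, unlike with listwise deletion.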

Dataset Description:

This database was created to identify a voice as male or female, based upon acoustic properties of the voice and speech. The dataset consists of 3,168 recorded voice samples, collected from male and female speakers.

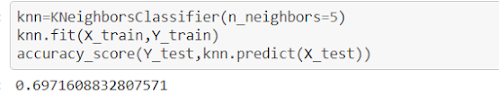

Applying KNN before pre-processing techniques:

Task 1: Standardization

Task 2: Normalization

QUESTION/ANSWER:

1. How to decide variance threshold in data reduction?

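The variance threshold depends on the probability distribution of the feature. For example, a Boolean feature is a Bernoulli random variable with variance p(1 − p), so a threshold of 0.8 × (1 − 0.8) = 0.16 removes features that take the same value in more than 80% of the samples.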
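
As an illustration, scikit-learn's `VarianceThreshold` drops every feature whose variance does not exceed a chosen threshold. The sketch assumes Boolean features, whose variance is p(1 - p), and a threshold of 0.8 * (1 - 0.8); the small array is made up for the example.

```python
import numpy as np
from sklearn.feature_selection import VarianceThreshold

# Hypothetical Boolean features; the first column is 0 in 5 of 6 rows.
X = np.array([[0, 0, 1],
              [0, 1, 0],
              [1, 0, 0],
              [0, 1, 1],
              [0, 1, 0],
              [0, 1, 1]])

# Bernoulli variance is p * (1 - p); this threshold removes features
# that take the same value in more than 80% of the samples.
selector = VarianceThreshold(threshold=0.8 * (1 - 0.8))
X_reduced = selector.fit_transform(X)

print(selector.variances_)     # variance of each original column
print(selector.get_support())  # True for the columns that are kept
print(X_reduced.shape)
```

For continuous features the variance depends on the unit of measurement, so a threshold is only meaningful when chosen with each feature's scale in mind.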

2. Does the output remain the same after applying the model on encoded data vs. the original data? If yes/no, explain with a valid reason.

No. Before applying preprocessing techniques the accuracy was 69.71%, and after applying them the accuracy changed. Without scaling, features with a larger variance can dominate the objective function. Preprocessing prevents a less significant feature from dominating the objective function merely because of its larger range. Features measured in different units should also be scaled so that each feature starts with equal weight, which generally helps the prediction model.

After standardization - accuracy = 50.15%

After normalization - accuracy = 96.68%
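
The effect of feature range on KNN can be sketched on synthetic data. Everything below is an assumption made for illustration: the data is randomly generated, it is not the voice dataset, and it does not reproduce the accuracies reported above. One feature separates the two classes but has a small range; the other is pure noise with a very large range.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 500
y = rng.integers(0, 2, size=n)

# Informative feature: small range, class means 0 and 4.
informative = rng.normal(loc=4.0 * y, scale=1.0)
# Noise feature: huge range, unrelated to the class.
noise = rng.normal(loc=0.0, scale=1e5, size=n)
X = np.column_stack([informative, noise])

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

# KNN on raw features: Euclidean distance is dominated by the noise column.
knn_raw = KNeighborsClassifier(n_neighbors=5).fit(X_train, y_train)
acc_raw = knn_raw.score(X_test, y_test)

# KNN on standardized features: the scaler is fitted on the training split only.
scaler = StandardScaler().fit(X_train)
knn_scaled = KNeighborsClassifier(n_neighbors=5).fit(
    scaler.transform(X_train), y_train)
acc_scaled = knn_scaled.score(scaler.transform(X_test), y_test)

print(f"raw: {acc_raw:.2%}  scaled: {acc_scaled:.2%}")
```

On data built this way the scaled model should score noticeably higher, because both features contribute comparably to the distance once they share a scale. Real datasets can behave differently, so the comparison is worth repeating for each scaler.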

REFERENCES:

- https://www.analyticsvidhya.com/blog/2016/07/practical-guide-data-preprocessing-python-scikit-learn/

- https://scikit-learn.org/stable/modules/preprocessing.html

CODE FOR REFERENCE:

https://github.com/Meghanshi999/17IT087_DATASCIENCE/blob/main/87_DS_PRAC1.ipynb
